In clinical trials, rework caused by inconsistent data structures, programming errors, or non-compliance with regulatory standards can delay submissions and inflate budgets. By adopting proactive programming strategies, SAS developers and data managers can streamline workflows, reduce revisions, and ensure smooth transitions between trial phases. This article outlines specific methods for avoiding rework, illustrated with practical cases and comparison tables.

I. The Costs of Rework in Clinical Trials

Rework usually stems from the following reasons:

· CRF Design (CRF Phase): The Case Report Form (CRF) is the primary tool for data collection in clinical research, and its design quality directly affects the quality of the data collected. A sound CRF design underpins data collection, entry, verification, and subsequent statistical analysis. Reviewing the design from a data management perspective can greatly reduce the difficulty of later-stage data management and statistical analysis and improve research efficiency. If the variable names predefined in the CRF do not meet submission requirements, a large amount of time will be spent in the subsequent programming and review stages, and any omission carries a risk of rework.

· Non-compliance with CDISC Standards (SDTM/ADaM): The collected variables fail Pinnacle 21 (P21) validation. For example, P21 may report:

Calculation issue: AWTDIFF is populated, but AWTARGET and/or one of [ADY, ARELTM] are not both present and populated.

This message means that required companion variables are missing. Similarly, in SDTM.PP, PPRFTDTC and PPTPTREF need to appear as a pair; omitting one may lead to requests for supplementary data submissions. This not only consumes staff time and resources but also delays the submission process.

Many variables need to appear in pairs like this. For the full list, consult the SDTMIG in detail.
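A paired-variable check of this kind can be run before the data ever reaches P21. The following is a minimal sketch, not a substitute for P21 itself: the dataset ADLB and its values are hypothetical, and for brevity the check looks only at ADY rather than at either ADY or ARELTM.

```sas
/* Hypothetical ADaM-like records; the values are illustrative only */
data adlb;
  input usubjid $ ady awtarget awtdiff;
  datalines;
S001 15 14 1
S002 . 28 2
S003 30 . .
;
run;

/* Keep records where AWTDIFF is populated but a companion variable is not */
data pair_issues;
  set adlb;
  length issue $60;
  if not missing(awtdiff) and (missing(awtarget) or missing(ady)) then do;
    issue = 'AWTDIFF populated without both AWTARGET and ADY';
    output;
  end;
run;

proc print data=pair_issues noobs;
run;
```

With the sample rows above, only S002 is listed, because its AWTDIFF is populated while ADY is missing.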

· Inconsistent Variable Naming (Cross-phase): A project may go through multiple clinical trials. It is best to establish a unified standard at the design stage, so that most programs can be reused and any modifications stay simple. This can save a significant amount of time for clinical submissions.

· Inadequate Preparation of Submission Documents (Submission Phase): Submitting materials that do not strictly follow the required submission format delays the review.

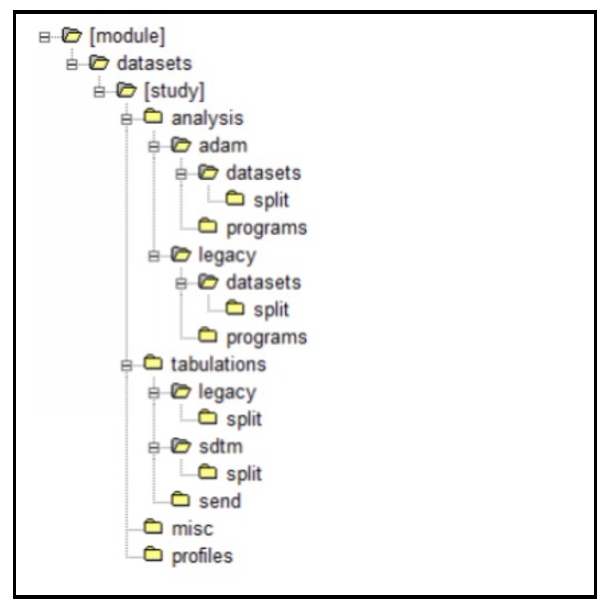

Figure 1:

Table 1: Common Causes of Rework and Their Impacts

| Cause | Affected Phase | Average Time Spent | Cost Multiplier |
| --- | --- | --- | --- |
| Inconsistent variable names | From SDTM to ADaM | 20-40 hours | 3× |
| Protocol amendment | Entire submission phase | 50+ hours | 5× |
| Inadequate document preparation | Submission phase | 100+ hours | 10× |

II. Intelligent Programming Strategies

(I) Core Principles and Optimization Strategies for CRF Question Setting:

1. Clarity and Unambiguity

o Questions should be stated clearly and understandably to ensure that all parties have a consistent understanding. Provide detailed filling instructions (which can be accompanied by illustrations). Place the instructions either after the questions or on the back of the CRF.

2. Comprehensiveness and Conciseness

o Include only the variables necessary for statistical analysis. Delete all redundant items to avoid data redundancy.

3. Splitting of Single Questions

o Avoid compound questions (such as "Do you smoke and drink?"). Split them into independent questions (such as "Do you smoke?" and "Do you drink?"), reducing logical confusion.

4. Priority of Structured Data

o Try to use numerical types (multiple-choice questions or direct numerical input) or date formats, and reduce text-type data to facilitate later-stage management and analysis.

5. Standardized Option Design

o Answer options should meet the following criteria:

Completeness: Cover all possibilities and add fallback options such as "Other/Unknown/Not Applicable".

Mutual Exclusivity: Options should be independent and non-overlapping, so that each patient matches exactly one answer.

Objective: Improve data accuracy, integrity, and analysis efficiency through standardized design, and reduce the costs of data cleaning and interpretation.
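When answers are collected as coded values, completeness of the option list can also be checked programmatically. The sketch below is illustrative only: the code list SMOKEF, the dataset CRF, and all values are hypothetical and do not come from any real CRF.

```sas
/* Hypothetical code list for a single-choice smoking question */
proc format;
  value smokef
    1     = 'Never'
    2     = 'Former'
    3     = 'Current'
    98    = 'Unknown'
    99    = 'Not applicable'
    other = 'INVALID';
run;

/* Illustrative CRF extract; the values are made up */
data crf;
  input subjid $ smoke;
  datalines;
S001 1
S002 3
S003 7
S004 .
;
run;

/* Keep answers that fall outside the code list (missing values included) */
data code_issues;
  set crf;
  if put(smoke, smokef.) = 'INVALID';
run;
```

Here S003 (code 7) and S004 (missing) end up in CODE_ISSUES, because neither value appears in the code list.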

(II) SAS Programs Assisting Manual Verification

1. Data Verification

Data verification is a series of validation checks on the accuracy, integrity, logical consistency, and medical reasonableness of data. It is generally conducted according to a data verification plan and includes electronic verification and manual verification.

Electronic verification, also known as logical verification, consists of checks that can be programmed into the database to identify data errors automatically.

Manual verification covers checks that cannot be programmed into the database and therefore require human review. It generally includes review of offline SAS listings and of manual verification listings.

For different problems, we can write different SAS programs to meet verification requirements. Here is an example:

For a certain Adverse Event (AE) check, the duration of the AE must be confirmed. If the start date of the second record with the same AE name falls within one day of the end date of the first record, the duration should be calculated as the end date of the second record minus the start date of the first record. With tens of thousands of records, checking all of them manually is clearly time-consuming and labor-intensive.

| Subject ID | AE No. | AE Term | Outcome | Start Date | End Date |
| --- | --- | --- | --- | --- | --- |
| TEST-01-01002 | 13 | Abnormal liver function | Not recovered/not cured | 2018-12-03 | 2018-12-14 |
| TEST-01-01002 | 17 | Abnormal liver function | Not recovered/not cured | 2019-01-02 | 2019-02-20 |
| TEST-01-01002 | 15 | Abnormal liver function | Not recovered/not cured | 2018-12-14 | 2018-12-24 |
| TEST-01-01002 | 12 | Abnormal liver function | Improving/Recovering | 2018-11-19 | 2018-12-03 |
| TEST-01-01002 | 5 | Abnormal liver function | Not recovered/not cured | 2018-09-30 | 2018-10-30 |
| TEST-01-01002 | 22 | Abnormal liver function | Improving/Recovering | 2019-02-20 | 2019-09-04 |
| TEST-01-01002 | 9 | Abnormal liver function | Not recovered/not cured | 2018-10-30 | 2018-11-19 |
| TEST-01-01002 | 16 | Abnormal liver function | Not recovered/not cured | 2018-12-24 | 2019-01-02 |

/* Example: calculate AE duration by merging consecutive records of the same AE */

/* Sort by subject, AE term, and start/end dates (ASTDT and AENDT are numeric SAS dates) */

proc sort data=test;

by usubjid aedecod astdt aendt;

run;

data test1;

set test;

by usubjid aedecod astdt;

/* Carry the running group boundaries and the group counter across records */

retain last_aendt group_start group_end m;

format last_aendt group_start group_end yymmdd10.;

/* First record of an AE term: open group 1 with this record's dates */

if first.aedecod then do;

group_start = astdt;

group_end = aendt;

last_aendt = aendt;

m = 1;

end;

else do;

/* Gap of 0 to 1 day after the previous end date: merge and extend the end date of the group */

if 0 <= (astdt - last_aendt) <= 1 then do;

group_end = max(aendt, last_aendt);

last_aendt = group_end;

end;

/* Otherwise (gap of more than 1 day, an overlap, or a missing date): start a new group */

else do;

m + 1;

group_start = astdt;

group_end = aendt;

last_aendt = aendt;

end;

end;

run;

In this way, records are grouped by usubjid, aedecod, and the group counter m. The last record in each group carries the final group_start and group_end, from which the AE duration can be calculated.

In actual verification work, many programs with similar functions can be written, and they save a great deal of time.
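The final step could be sketched as follows, assuming the TEST1 dataset produced by the program above. The output name AE_DUR, the variable ADURN, and the inclusive "+ 1" day convention are illustrative assumptions, not part of the original program.

```sas
/* TEST1 is in usubjid / aedecod / astdt order, so M never decreases within an AE term */
data ae_dur;
  set test1;
  by usubjid aedecod m;
  if last.m;                               /* last record holds the final group_end */
  adurn = group_end - group_start + 1;     /* assumed convention: count both endpoints */
  keep usubjid aedecod m group_start group_end adurn;
  format group_start group_end yymmdd10.;
run;
```

For the eight sample records above, which chain together without gaps, this yields a single group running from 2018-09-30 to 2019-09-04, that is, 340 days under the inclusive convention.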

2. Automation of Time Variables

Date and time values are often formatted inconsistently across datasets, which makes data parsing and review difficult.

Following the CDISC standard, all date-time variables (such as AESTDTC) should use the ISO 8601 format, for example YYYY-MM-DDThh:mm.

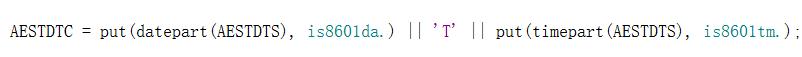

Format conversion

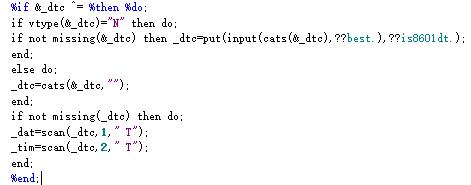
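For values already stored as numeric SAS dates or datetimes, the conversion can be sketched with the built-in ISO 8601 formats. The dataset and variable names below are hypothetical, and truncating to minutes with SUBSTR is just one possible choice.

```sas
data iso_demo;
  length aestdtc $19 aestdtc_min $16 aestdtc_date $10;
  ast_dtm = '14DEC2018:08:30:00'dt;                   /* hypothetical numeric datetime */
  aestdtc      = put(ast_dtm, e8601dt19.);            /* 2018-12-14T08:30:00 */
  aestdtc_min  = substr(aestdtc, 1, 16);              /* 2018-12-14T08:30 */
  aestdtc_date = put(datepart(ast_dtm), e8601da10.);  /* 2018-12-14 */
  format ast_dtm datetime20.;
run;
```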

In practice, however, the dates and times in the raw data are often not standardized. They may contain placeholders such as "UK" (unknown) or null values, and each case has to be handled differently, which consumes a lot of time.

If we enumerate all of these cases and implement them as a macro, later use only requires calling the macro and changing the input and output variables.

/* Example: Standardize time for different time formats. */

The above is the code for processing time in different formats. Those who are interested can refer to it.
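As a hedged illustration of what such a macro might look like (this is not the original program), the sketch below assumes raw dates arrive as text in year-month-day order with "UK" marking unknown parts. The macro name STD_DTC, its parameters, and the sample data are all hypothetical.

```sas
%macro std_dtc(invar=, outvar=);
  length &outvar $10 _y $4 _m _d $2;
  _y = scan(&invar, 1, '-/ ');
  _m = scan(&invar, 2, '-/ ');
  _d = scan(&invar, 3, '-/ ');
  /* Anything that is not a 4-digit year is treated as unknown */
  if lengthn(_y) ne 4 or notdigit(strip(_y)) > 0 then _y = '';
  /* "UK" or other non-numeric month/day becomes unknown; numeric parts are zero-padded */
  if lengthn(_m) = 0 or notdigit(strip(_m)) > 0 then _m = '';
  else _m = put(input(_m, 2.), z2.);
  if lengthn(_d) = 0 or notdigit(strip(_d)) > 0 then _d = '';
  else _d = put(input(_d, 2.), z2.);
  /* Build the ISO 8601 value, truncating at the first unknown part */
  if missing(_y) then &outvar = '';
  else if missing(_m) then &outvar = _y;
  else if missing(_d) then &outvar = catx('-', _y, _m);
  else &outvar = catx('-', _y, _m, _d);
  drop _y _m _d;
%mend std_dtc;

/* Illustrative raw values */
data raw;
  input aestdat $10.;
  datalines;
2018-12-03
2018-12-UK
2018-UK-UK
UK-UK-UK
2019-1-5
;
run;

data ae;
  set raw;
  %std_dtc(invar=aestdat, outvar=aestdtc);
run;
```

For the five sample values, AESTDTC comes out as 2018-12-03, 2018-12, 2018, blank, and 2019-01-05. The sketch handles dates only, does not validate month or day ranges, and drops a known day when the month is unknown. A production macro would need to cover time parts and further raw layouts.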

3. Modularize Code to Improve Reusability

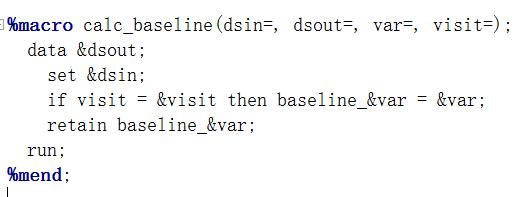

Split the program into reusable macros (such as endpoint calculation, data flagging, derived variables).

Example: A macro for calculating baseline values:
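A minimal sketch of such a macro, under stated assumptions: baseline is taken as the last non-missing value on or before a reference date, and the input follows an ADaM-like layout with USUBJID and PARAMCD. The macro name BASELINE, its parameters, and the work dataset names are hypothetical.

```sas
%macro baseline(indata=, outdata=, valvar=aval, dtvar=adt, refvar=trtsdt);
  /* Candidate baseline records: non-missing values dated on or before the reference date */
  proc sort data=&indata out=_pre;
    by usubjid paramcd &dtvar;
    where not missing(&valvar) and not missing(&dtvar) and &dtvar <= &refvar;
  run;

  /* Latest candidate per subject and parameter becomes the baseline */
  data _base(keep=usubjid paramcd base);
    set _pre;
    by usubjid paramcd &dtvar;
    if last.paramcd;
    base = &valvar;
  run;

  proc sort data=&indata out=_all;
    by usubjid paramcd &dtvar;
  run;

  /* Attach BASE to every record and derive change from baseline */
  data &outdata;
    merge _all(in=a) _base;
    by usubjid paramcd;
    if a;
    if not missing(&valvar) and not missing(base) then chg = &valvar - base;
  run;
%mend baseline;
```

A call might look like `%baseline(indata=adlb, outdata=adlb_base);`, where ADLB is a hypothetical input dataset. For simplicity the sketch derives CHG on every record. A real implementation would typically restrict it to post-baseline records and follow the baseline definition in the statistical analysis plan.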

Table 4 Reusable Code Modules

| Module Type | Purpose | Applicable Phase |
| --- | --- | --- |
| Endpoint Calculation | Derive Efficacy Indicators | Phases 2-4 |
| Data Flagging | Identify Safety Populations | All phases |
| Merge Operations | Merge SDTM Domains to Construct ADaM | Phases 3-4 |

4. Anticipating Protocol Amendments

Protocol changes (such as new endpoints or revised population criteria) are inevitable. Coping strategies include:

· Parameterized assumptions (such as the length of the treatment window).

· Using dynamic code to adapt to new visit schedules.
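Both strategies can be sketched together: the treatment window lives in a macro variable, and the visit schedule is kept as data rather than hard-coded logic, so an amendment changes a value or a row instead of the program. All dataset names, variable names, and window limits below are hypothetical.

```sas
%let trt_window = 28;   /* hypothetical treatment window in days */

/* Hypothetical visit schedule stored as data; an amendment only edits these rows */
data visit_windows;
  input avisitn avisit :$10. lo hi;
  datalines;
1 Week1 1 10
2 Week2 11 21
3 Week4 22 35
;
run;

/* Illustrative analysis records */
data adlb;
  input usubjid $ ady aval;
  datalines;
S001 8 5.1
S001 15 5.4
S001 40 5.0
;
run;

/* Assign visits by window lookup and flag on-treatment records */
proc sql;
  create table adlb_win as
  select a.*,
         w.avisitn,
         w.avisit,
         case when a.ady between 1 and &trt_window then 'Y' else ' ' end as ontrtfl
  from adlb as a
  left join visit_windows as w
    on a.ady between w.lo and w.hi
  order by a.usubjid, a.ady;
quit;
```

With the sample rows, study day 8 maps to Week1 and day 15 to Week2, both flagged on-treatment, while day 40 falls outside every window and keeps a missing visit and a blank flag.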

Conclusion: Early Investment, Later Savings

Intelligent programming is not just about writing code; it is about building systems that can withstand protocol changes, regulatory reviews, and cross-phase requirements. By prioritizing standardization, automation, and document management, teams can significantly reduce rework, accelerate timelines, and focus resources on scientific innovation.

Final recommendation: Conduct "rework audits" at the end of each phase to optimize processes. Early-stage investment pays off many times over in later stages.

Next Steps

Unsure how to save time in the later trial phases?

Our team of biostatistical experts offers free strategy consultations, conducting in-depth diagnostics of your trial protocol design, statistical analysis plan compliance, data governance maturity, biostatistical resource allocation, and vendor capability gaps.

Contact Person: Suling Zhang, Vice President of International Operations and Business Development

Email: suling.zhang@gcp-clinplus.com

Tel.: +1 609-255-3581

GCP ClinPlus, a clinical research partner with 22 years of global delivery experience, has completed over 2,200 international multi-center clinical trial projects, successfully facilitating over 160 new drug approvals by the FDA, NMPA, and EMA.

With over 30 years of regulatory affairs experience from our US team, we provide a full-cycle biostatistics solution for each project that complies with ICH-GCP guidelines.